Rethinking Trust: My Concern with Niche AI Tools

As AI rapidly integrates into our daily lives, we're seeing an explosion of specialized tools promising to simplify every conceivable task. I've been experimenting with several of these niche AI applications, from productivity boosters to detailed note-taking solutions. But amidst the convenience, a lingering discomfort has surfaced.

Each new AI tool seems to ask for more permissions, more access, and a deeper reach into our personal and professional data. This pattern has started to feel problematic. Each tool, though helpful, wants complete trust, often at the cost of our privacy and digital security.

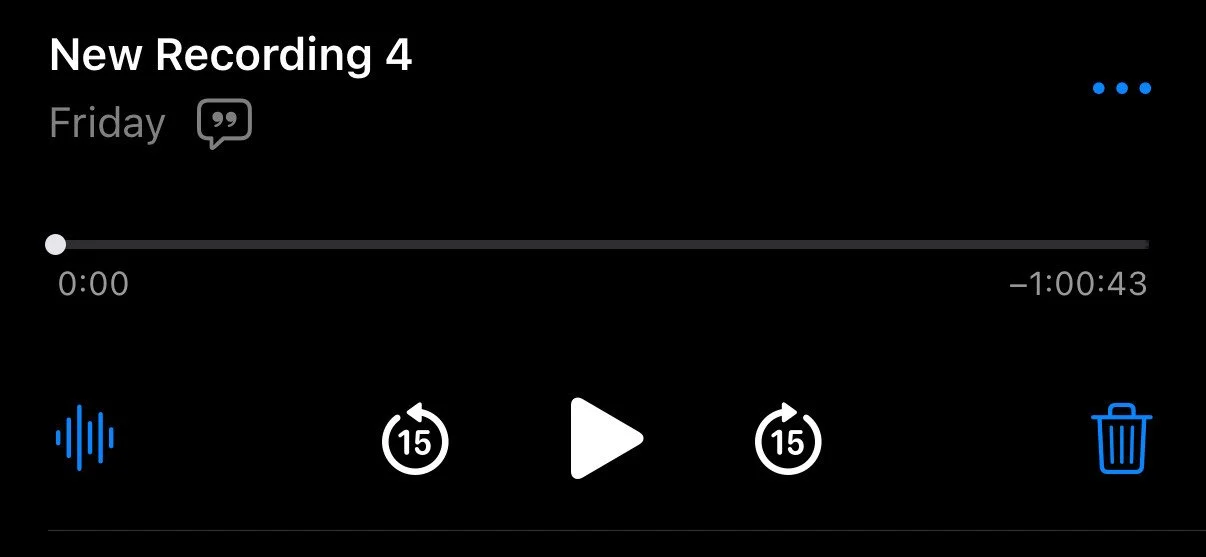

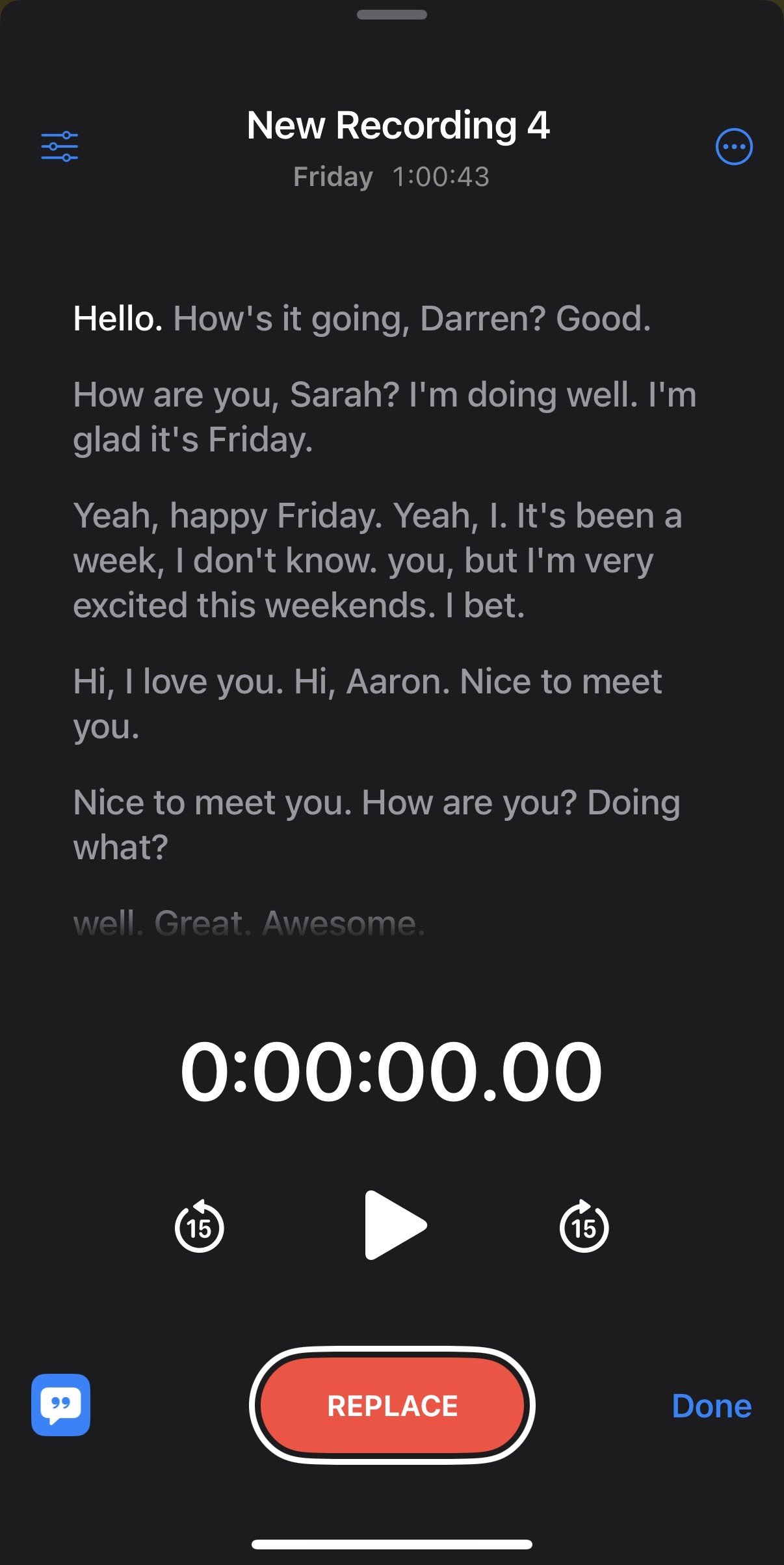

Feeling uneasy, I recently chose a different approach. Rather than adopting a specialized meeting note-taking tool, which usually requests extensive access, I went back to a simpler solution: Apple's built-in voice recording app. This native app has a simple yet robust transcription feature, so I can feed meeting transcripts directly into ChatGPT. The result? I only have to trust one proven AI provider with my data.

This approach offers another benefit: consistency. By using a single trusted AI platform to summarize the transcripts and draw insights from them, I keep control over the quality and accuracy of responses without managing multiple disconnected tools. I also avoid the redundant effort of training several separate AI systems, each with varying reliability.

Reflecting on this, I suspect the future of practical AI won't lie in dozens of specialized, single-purpose apps. Instead, we'll move toward comprehensive, multimodal AI solutions that handle multiple functions within a unified, trusted environment. Giving AI access to intimate parts of our lives is already a significant leap of faith. Expecting users to hand their data over to multiple niche providers may prove unrealistic.

For now, my choice is clear: simplify and consolidate, choosing carefully which digital assistant earns my trust.